Executive Summary↑

AI research is shifting from brute-force scaling toward architectural precision. Recent breakthroughs in Post-LayerNorm stability and activation steering suggest the industry's prioritizing inference reliability over raw parameter counts. This pivot directly affects enterprise margins by reducing compute waste and improving model uptime.

Specialized sectors like healthcare are demonstrating that precision beats general capability. Advances in hemodynamics surrogation for medical imaging and vision restoration show AI moving from digital chat to life-critical physical interventions. These high-stakes applications represent defensible market positions that generic LLMs can't easily disrupt through scale alone.

Capital should follow this transition from broad infrastructure to firms solving operational reliability. The next phase of growth depends on models that function predictably in messy, real-world environments rather than just pristine lab settings.

Continue Reading:

- Bandits in Flux: Adversarial Constraints in Dynamic Environments — arXiv

- HARMONI: Multimodal Personalization of Multi-User Human-Robot Interact... — arXiv

- Calibration without Ground Truth — arXiv

- RHSIA: Real-time Hemodynamics Surrogation for Non-idealized Intracrani... — arXiv

- Reflective Translation: Improving Low-Resource Machine Translation via... — arXiv

Research & Development↑

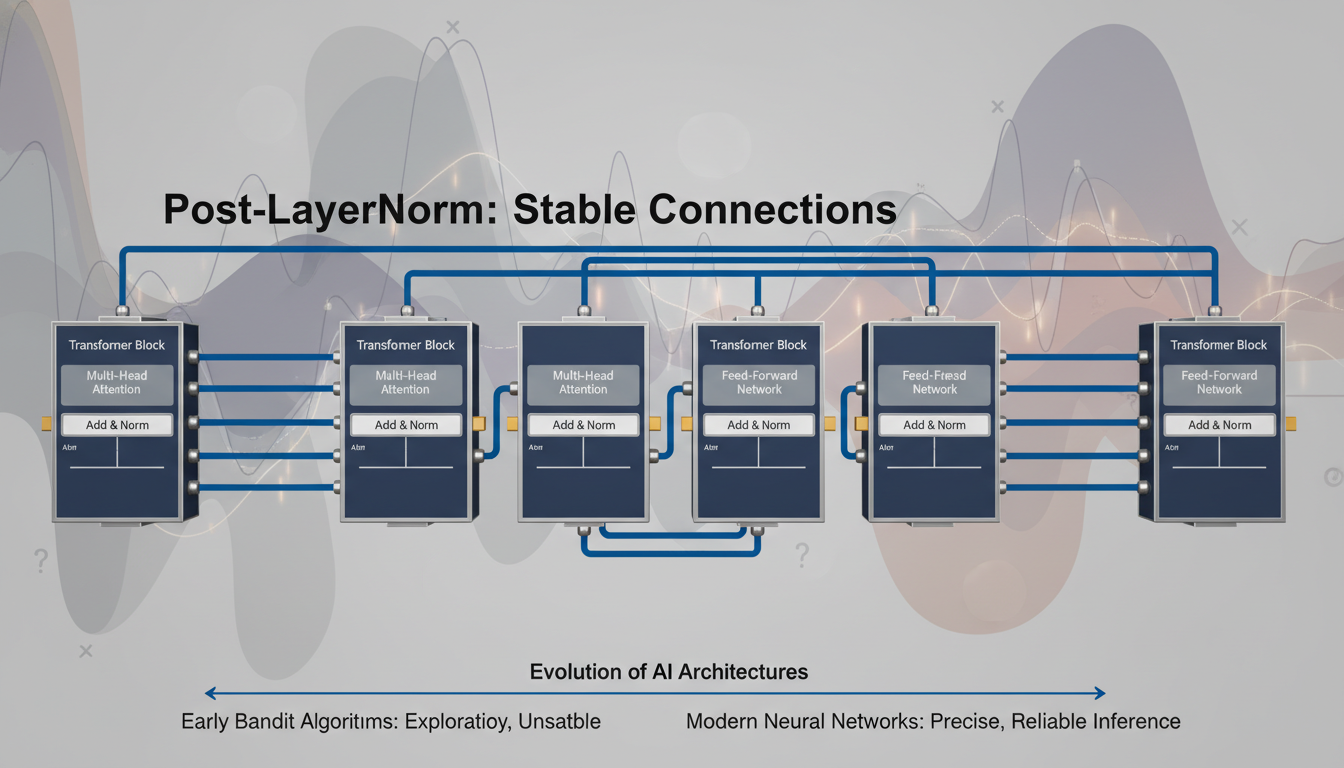

Companies are shifting focus from simply building larger models to making existing ones reliable enough for regulated industries. Post-LayerNorm Is Back demonstrates a return to foundational architecture to achieve stability in deep models. This matters because training stability translates directly to lower compute waste, a line item currently eating margins at every major lab.

Researchers are also finding ways to peek inside the black box. The work on Activation Steering identifies reasoning-critical neurons to improve inference reliability. For an investor, this suggests a path toward more predictable AI where we can nudge a model toward accuracy without retraining it from scratch. This type of surgical intervention could reduce the $0.01 per-query cost by minimizing redundant checks and error-correction loops.

The shift toward high-stakes physical applications is evident in RHSIA, which handles real-time blood flow simulations for brain aneurysms. Unlike the hallucination-prone world of creative AI, these surrogate models require extreme precision. Similarly, the HARMONI project tackles the multi-user personalization problem in robotics. Solving how a robot interacts with three different people in one room is a prerequisite for the domestic robotics market to move past $5.0B in annual sales.

The "data wall" remains a primary concern for scaling. Reflective Translation and new methods for Calibration without Ground Truth address the reality that we won't always have perfect data. If models can self-reflect to improve low-resource language tasks or estimate their own error rates without labels, the addressable market for AI expands into sectors where data is private or currently non-existent. These internal checks are the "insurance policy" needed for autonomous systems to operate without constant human oversight.

Continue Reading:

- HARMONI: Multimodal Personalization of Multi-User Human-Robot Interact... — arXiv

- Calibration without Ground Truth — arXiv

- RHSIA: Real-time Hemodynamics Surrogation for Non-idealized Intracrani... — arXiv

- Reflective Translation: Improving Low-Resource Machine Translation via... — arXiv

- Post-LayerNorm Is Back: Stable, ExpressivE, and Deep — arXiv

- Identifying and Transferring Reasoning-Critical Neurons: Improving LLM... — arXiv

- SONIC: Spectral Oriented Neural Invariant Convolutions — arXiv

Regulation & Policy↑

A new paper on arXiv titled Bandits in Flux addresses a technical hurdle that's quickly turning into a legal one. The research focuses on how AI agents handle adversarial constraints in shifting environments. It's a fundamental question for any company deploying automated systems in the real world where rules and risks don't stay static.

For the C-suite, this research signals a shift in what regulators expect from model audits. The EU AI Act and recent SEC inquiries already prioritize the ability of algorithms to withstand intentional manipulation or sudden shifts in data. If a model can't stay within its legal guardrails when an environment turns hostile, it won't pass the durability tests likely coming to finance and healthcare in 2025.

We're moving past the era where a model's performance in a vacuum is enough to satisfy a board or a regulator. This paper provides the mathematical groundwork for the kind of stress testing that will soon be a prerequisite for market entry. Companies that ignore these dynamic constraints now risk expensive retrofits later when the compliance mandates inevitably arrive.

Continue Reading:

Sources gathered by our internal agentic system. Article processed and written by Gemini 3.0 Pro (gemini-3-flash-preview).

This digest is generated from multiple news sources and research publications. Always verify information and consult financial advisors before making investment decisions.