Executive Summary

Today's research signals a pivot toward operational efficiency and risk mitigation. While public attention stays fixed on model scale, the labs are working on the harder problems of cost and safety. The question is shifting from whether a model can perform a task to whether a firm can afford to run it at scale.

Efficiency is the primary driver of sustainable enterprise growth. Parallel-Probe targets the cost of parallel reasoning, while visual token pruning cuts the compute spent training multimodal models. These optimizations matter for margin expansion because they let more complex workloads run on existing hardware without a proportional increase in spend.

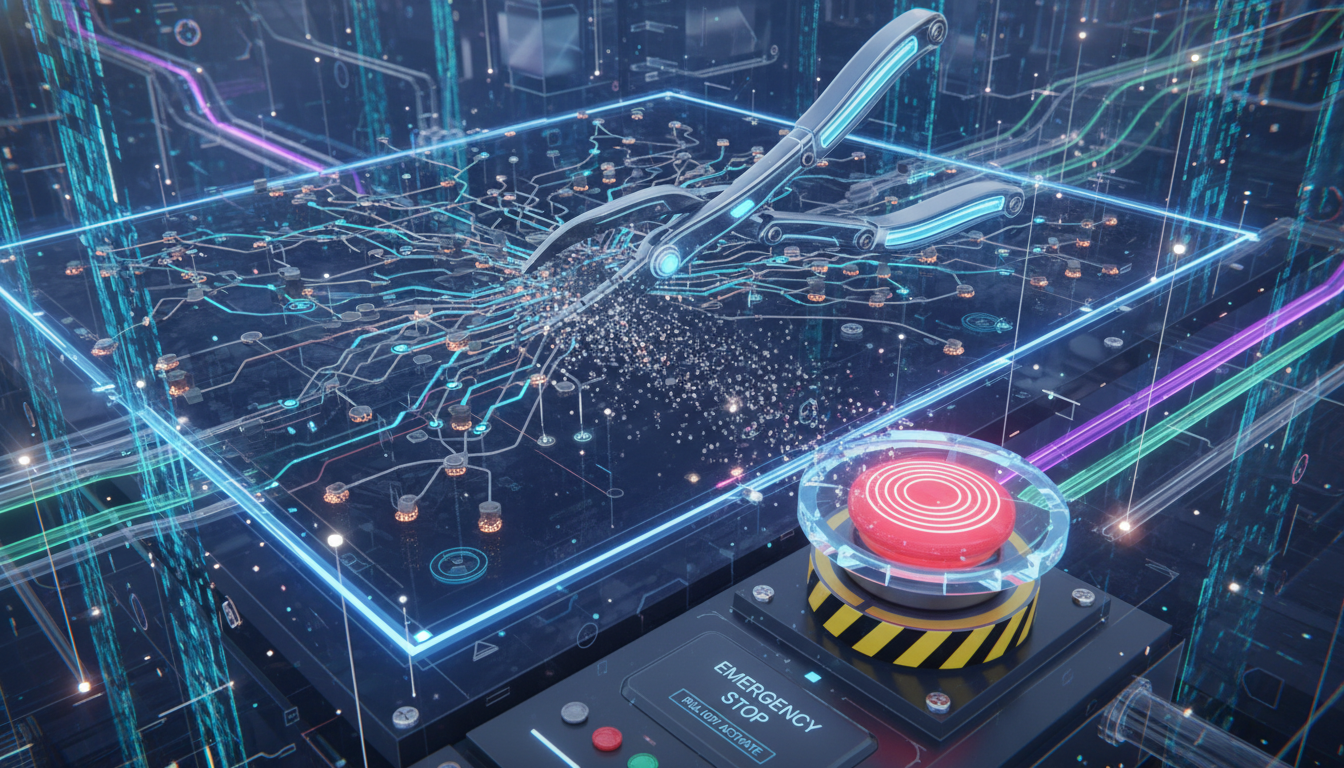

Engineers are also hardening systems for the real world through better moderation and physical safety. New research into decoding coded memes and learning from emergency robot stops addresses the liability concerns that keep many boards from greenlighting full-scale deployment. Expect the market to reward companies that turn these defensive guardrails into standardized, plug-and-play products.

Continue Reading:

- They Said Memes Were Harmless-We Found the Ones That Hurt: Decoding Jo... — arXiv

- Preference-based Conditional Treatment Effects and Policy Learning — arXiv

- Robust Intervention Learning from Emergency Stop Interventions — arXiv

- Continuous Control of Editing Models via Adaptive-Origin Guidance — arXiv

- Deep-learning-based pan-phenomic data reveals the explosive evolution ... — arXiv

Technical Breakthroughs

Multimodal training is getting significantly cheaper as the field moves past brute-force compute. Researchers behind arXiv:2602.03815v1 introduced a pruning technique that strips redundant visual tokens during training. Most vision-language models give every image patch, and therefore every visual token, equal weight, which spends substantial compute on uninformative background regions. By discarding these low-value tokens, the team reports faster training of large-scale models without a loss in performance. This matters because visual tokens often make up most of a multimodal model's input sequence, and visual processing remains a primary cost bottleneck for companies trying to deploy AI assistants, including on mobile hardware.
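The basic idea of token pruning can be sketched as "score every visual token, keep the top fraction, drop the rest." The sketch below is a generic top-k pruner, not the method of arXiv:2602.03815v1: the per-token `scores` (for example, attention received from a text or summary query) and the helper names `prune_visual_tokens` and `attention_cost_ratio` are hypothetical. The second function gives a rough sense of why pruning pays off, since self-attention cost grows with the square of sequence length.

```python
from typing import List, Sequence, Tuple


def prune_visual_tokens(
    tokens: Sequence[List[float]],
    scores: Sequence[float],
    keep_ratio: float,
) -> Tuple[List[List[float]], List[int]]:
    """Keep the highest-scoring fraction of visual tokens (hypothetical scoring)."""
    if not 0.0 < keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in (0, 1]")
    if len(tokens) != len(scores):
        raise ValueError("tokens and scores must have the same length")
    k = max(1, int(len(tokens) * keep_ratio))
    # Rank token indices by score, highest first (sorted() is stable, so ties keep input order).
    ranked = sorted(range(len(tokens)), key=lambda i: -scores[i])
    # Re-sort the survivors so kept tokens preserve their original spatial order.
    kept_idx = sorted(ranked[:k])
    return [list(tokens[i]) for i in kept_idx], kept_idx


def attention_cost_ratio(n_text: int, n_visual: int, keep_ratio: float) -> float:
    """Rough after/before ratio of self-attention cost, modeling cost as sequence_length**2."""
    before = (n_text + n_visual) ** 2
    after = (n_text + int(n_visual * keep_ratio)) ** 2
    return after / before
```

With illustrative numbers of 64 text tokens and 576 visual tokens, keeping a quarter of the visual tokens gives `attention_cost_ratio(64, 576, 0.25)` of about 0.106, so the quadratic attention term drops to roughly a tenth. Per-token costs such as the MLP layers shrink only linearly, so the end-to-end saving is smaller than this figure suggests.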

Solving for speed doesn't automatically solve for accuracy, especially when content is intentionally deceptive. A study on harmful memes (arXiv:2602.03822v1) shows why automated moderation remains a major liability for social media platforms. The researchers found that toxicity often hides in cultural shorthand and symbols that current AI still struggles to decode. This gap in understanding irony and satire is one reason human-in-the-loop moderation costs stay high despite heavy technical investment. Even the most advanced vision models still lack the cultural intuition that reliable safety compliance requires.

Continue Reading:

- They Said Memes Were Harmless-We Found the Ones That Hurt: Decoding Jo... — arXiv

- Fast-Slow Efficient Training for Multimodal Large Language Models via ... — arXiv

Research & Development

Human intervention is becoming a core source of training data rather than a failure state. Researchers behind arXiv:2602.03825v1 study how models can learn from "emergency stop" signals. This shift matters because it treats each human override as information about where the safety boundary lies. When a human takes the wheel, the system shouldn't just reset; it should learn which contextual triggers led to the stop so it can avoid repeating the error.
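One simple way to turn an emergency stop into a learning signal is to penalize the steps that led up to it. The sketch below is a hypothetical labeling scheme, not the method of arXiv:2602.03825v1: the `Episode` container, the `window` of steps blamed for the stop, and the geometric `decay` are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Episode:
    """Hypothetical container: one rollout plus the step at which a human hit the e-stop."""
    num_steps: int
    stop_step: Optional[int] = None  # None means the episode ran without intervention


def estop_penalties(episode: Episode, window: int, decay: float) -> List[float]:
    """Per-step penalty derived from an emergency stop (illustrative scheme).

    The step at the stop gets -1.0; each earlier step inside `window` gets -decay**k,
    where k is its distance from the stop. Steps outside the window get 0.0.
    Steps after the stop are dropped because the episode ended there.
    """
    if window < 0 or not 0.0 < decay <= 1.0:
        raise ValueError("window must be >= 0 and decay in (0, 1]")
    if episode.stop_step is None:
        return [0.0] * episode.num_steps
    if not 0 <= episode.stop_step < episode.num_steps:
        raise ValueError("stop_step out of range")
    penalties = [0.0] * (episode.stop_step + 1)
    for t in range(max(0, episode.stop_step - window), episode.stop_step + 1):
        penalties[t] = -(decay ** (episode.stop_step - t))
    return penalties
```

For example, `estop_penalties(Episode(num_steps=6, stop_step=4), window=2, decay=0.5)` assigns `-0.25`, `-0.5`, and `-1.0` to the three steps ending at the stop. The decay encodes the assumption that steps closer to the stop are more likely to have caused it; the resulting values could serve as negative rewards or as labels for a "was this state safe?" classifier.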

Tailoring AI to individual needs requires more than better prompts. New research in arXiv:2602.03823v1 develops preference-based conditional treatment effects for policy learning. This moves us closer to AI that can make nuanced recommendations in fields like precision medicine, where the best choice depends on what each patient values. We're seeing a transition from models that seek a single "correct" answer to models that seek the right answer for a specific person.
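The core idea of combining treatment effects with personal preferences can be illustrated with a toy example. This is a minimal sketch, not the estimator from arXiv:2602.03823v1. It makes three simplifying assumptions: a naive per-subgroup difference in means estimates the effect on each outcome (valid only if treatment is randomized within subgroups), every record reports the same outcome names, and a person's preferences are a linear weight per outcome. The data layout and function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (subgroup label, treated flag, {outcome name: value}); hypothetical data layout.
Record = Tuple[str, bool, Dict[str, float]]


def subgroup_effects(records: List[Record]) -> Dict[str, Dict[str, float]]:
    """Per-subgroup, per-outcome difference in means (treated minus control)."""
    sums: Dict[Tuple[str, bool], Dict[str, float]] = defaultdict(lambda: defaultdict(float))
    counts: Dict[Tuple[str, bool], int] = defaultdict(int)
    for group, treated, outcomes in records:
        counts[(group, treated)] += 1
        for name, value in outcomes.items():
            sums[(group, treated)][name] += value
    effects: Dict[str, Dict[str, float]] = {}
    for group in {g for g, _ in list(counts)}:
        n_t, n_c = counts[(group, True)], counts[(group, False)]
        if n_t == 0 or n_c == 0:
            continue  # an effect needs both a treated and a control arm
        names = set(sums[(group, True)]) | set(sums[(group, False)])
        effects[group] = {
            n: sums[(group, True)][n] / n_t - sums[(group, False)][n] / n_c for n in names
        }
    return effects


def preference_weighted_effect(effect: Dict[str, float], weights: Dict[str, float]) -> float:
    """Collapse a multi-outcome effect into one number using a person's preference weights."""
    return sum(weights.get(name, 0.0) * delta for name, delta in effect.items())


def recommend(effect: Dict[str, float], weights: Dict[str, float]) -> bool:
    """Treat only if the preference-weighted effect is positive for this person."""
    return preference_weighted_effect(effect, weights) > 0
```

The same estimated effect can yield different recommendations: if treatment raises `relief` by 0.5 and `side_effects` by 1.0, a patient with weights `{"relief": 1.0, "side_effects": -0.2}` is recommended treatment, while one with `{"relief": 1.0, "side_effects": -1.0}` is not.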

The cost of reasoning remains a major hurdle for widespread deployment. Parallel-Probe (arXiv:2602.03845v1) introduces a 2D probing method to make parallel thinking, in which a model explores several reasoning paths at once, more efficient. If the technique scales, it directly challenges the high latency currently associated with deep reasoning chains. We're looking at a future where complex logic doesn't necessarily mean a long wait for the user or a massive compute bill for the provider.
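To see where probing can save compute in parallel thinking, consider a generic pattern: run several reasoning branches, periodically ask each for its current answer, and stop as soon as enough branches agree. The sketch below shows that early-stopping vote. It is not Parallel-Probe's 2D probing, whose details are not described here. The precomputed `probes` grid stands in for real model calls, and the `agreement` threshold is an illustrative parameter.

```python
from collections import Counter
from typing import Optional, Sequence, Tuple


def parallel_vote_with_early_stop(
    probes: Sequence[Sequence[Optional[str]]],
    agreement: float,
) -> Tuple[Optional[str], int]:
    """Early-stopping vote over parallel reasoning branches.

    probes[b][t] stands in for the intermediate answer branch b would report if probed
    at checkpoint t (None = no answer yet). In a real system each entry would be a model call.
    Returns (answer, checkpoints_used): stops at the first checkpoint where a single answer
    is held by at least `agreement` of all branches, else falls back to a plurality vote
    at the last checkpoint.
    """
    if not probes or not 0.0 < agreement <= 1.0:
        raise ValueError("need at least one branch and agreement in (0, 1]")
    n_branches = len(probes)
    n_checkpoints = min(len(branch) for branch in probes)
    if n_checkpoints == 0:
        raise ValueError("every branch needs at least one checkpoint")
    votes: Counter = Counter()
    for t in range(n_checkpoints):
        # Probe every branch at this depth and tally the answers that exist so far.
        votes = Counter(branch[t] for branch in probes if branch[t] is not None)
        if votes:
            answer, count = votes.most_common(1)[0]
            if count / n_branches >= agreement:
                return answer, t + 1  # consensus reached: stop spending compute
    # No early consensus: plurality at the final checkpoint (None if no branch answered).
    return (votes.most_common(1)[0][0] if votes else None), n_checkpoints
```

With four branches and `agreement=0.75`, a run whose branches converge on the same answer at the third of four checkpoints returns after three checkpoints, so the fourth round of generation is never paid for. The threshold trades latency against the risk of stopping on a premature consensus.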

Visual synthesis is moving toward higher precision and specialized hardware. EventNeuS (arXiv:2602.03847v1) reconstructs 3D meshes from the output of a single event camera. Event cameras report per-pixel brightness changes asynchronously instead of capturing full frames, which gives them high temporal resolution and high dynamic range and suits them to high-speed or low-light scenes. Combined with the continuous control offered by Adaptive-Origin Guidance (arXiv:2602.03826v1), these are building blocks for real-time spatial editing. The same versatility extends into pure science: deep learning is now being used to analyze pan-phenomic data and track avian evolution (arXiv:2602.03824v1). While public attention centers on chatbots, these same architectures are tackling biological questions whose data volumes were previously too large for human researchers to work through.
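The kind of data EventNeuS starts from can be illustrated with the standard idealized event-camera model. In this model, a pixel emits an event (x, y, timestamp, polarity) whenever its log intensity changes by a fixed contrast threshold. The sketch below simulates that model from ordinary intensity frames. It describes the sensor, not EventNeuS's reconstruction method. The `threshold` value, the small `eps` offset, and the choice to stamp events with the frame time (real sensors time them asynchronously) are simplifying assumptions.

```python
import math
from typing import List, Sequence, Tuple

# (x, y, timestamp, polarity): the basic unit an event camera reports.
Event = Tuple[int, int, float, int]


def simulate_events(
    frames: Sequence[Sequence[Sequence[float]]],
    timestamps: Sequence[float],
    threshold: float,
) -> List[Event]:
    """Idealized event-camera model driven by intensity frames (frames[t][y][x] >= 0).

    Each pixel keeps a reference log intensity. Every time its log intensity moves a full
    `threshold` (the contrast threshold) away from that reference, it emits one event with
    polarity +1 (brighter) or -1 (darker) and shifts the reference by the same amount.
    Simplification: events inherit the frame timestamp instead of being timed asynchronously.
    """
    if not frames or len(frames) != len(timestamps):
        raise ValueError("need one timestamp per frame")
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    eps = 1e-6  # keeps log() finite for black pixels
    height, width = len(frames[0]), len(frames[0][0])
    ref = [[math.log(frames[0][y][x] + eps) for x in range(width)] for y in range(height)]
    events: List[Event] = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        for y in range(height):
            for x in range(width):
                delta = math.log(frame[y][x] + eps) - ref[y][x]
                crossings = int(abs(delta) // threshold)  # whole thresholds crossed
                polarity = 1 if delta > 0 else -1
                events.extend([(x, y, t, polarity)] * crossings)
                ref[y][x] += polarity * crossings * threshold
    return events
```

A static pixel produces no events at all, which is why the sensor's output scales with scene motion rather than with frame rate. A pixel that brightens fourfold, with `threshold=0.5`, emits two positive events, because log 4 is about 1.39, which covers two whole thresholds.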

Continue Reading:

- Preference-based Conditional Treatment Effects and Policy Learning — arXiv

- Robust Intervention Learning from Emergency Stop Interventions — arXiv

- Continuous Control of Editing Models via Adaptive-Origin Guidance — arXiv

- Deep-learning-based pan-phenomic data reveals the explosive evolution ... — arXiv

- Parallel-Probe: Towards Efficient Parallel Thinking via 2D Probing — arXiv

- EventNeuS: 3D Mesh Reconstruction from a Single Event Camera — arXiv

Sources gathered by our internal agentic system. Article processed and written by Gemini 3.0 Pro (gemini-3-flash-preview).

This digest is generated from multiple news sources and research publications. Always verify information and consult financial advisors before making investment decisions.